The idea of an AI that actually knows it exists sits right at the intersection of engineering, philosophy and psychology. For almost a century we've been circling around it in films like Metropolis, 2001: A Space Odyssey, Blade Runner and Ex Machina, and in classic books like I, Robot and Neuromancer.

Today, large language models can write essays, design interfaces and hold convincing conversations. Some researchers even publicly speculate about AI consciousness. Others argue this is pure hype. As a design and product studio working with AI systems, we're less interested in sci-fi headlines than in a practical question: what happens when AI stops just processing inputs and starts living in an environment – with a body, sensors and its own experience of the world?

That's where concepts like Physical and Embodied AI and systems like NVIDIA Cosmos enter the picture.

Black-Box Intelligence vs. Real Understanding

Modern AI is already strange enough. Deep learning models – including LLMs – often behave like black boxes: they show patterns of reasoning, abstraction or intuition their creators didn't explicitly program, and can sometimes surprise the very teams who trained them.

At the same time, most AI researchers are clear on one point: today's systems do not have consciousness or inner experience in a human sense. They simulate understanding by pattern-matching on huge datasets and optimizing a loss function. Recent work in philosophy and AI even argues that conscious AI as people imagine it is fundamentally incompatible with current algorithmic approaches.

So why are serious labs and universities suddenly talking about consciousness again? Partly because our models are getting better at mimicking human reasoning and introspection, and partly because we're hitting the limits of "more data, bigger models" as a path to general intelligence. That pushes the field toward the next big frontier: embodiment.

Why a Body Matters: From LLMs to Physical AI

If you look at human consciousness through a cognitive science lens, it's not just about clever thoughts. It's about continuous perception, acting in the world and seeing the consequences, and building a stable sense of "me" over time. We do that through a body: a sensorimotor loop that constantly feeds the brain with data to interpret, predict and respond to.

Most AI systems don't have that. They train on static datasets (text, images, code) and optimize for accuracy on benchmark tasks; they don't move through a world, get feedback and adapt in real time. That's what Physical and Embodied AI tries to change. Instead of just processing inputs, an embodied agent lives in an environment (real or simulated), where it perceives, acts, updates its internal model of the world, and learns through trial and error rather than from labeled data alone.
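To make the perceive, act, update loop concrete, here is a minimal sketch of trial-and-error learning. Everything in it is an illustrative assumption: the `Corridor` environment, the reward of 1.0 at the goal, and the hyperparameters are invented for this example, and tabular Q-learning stands in for whatever learning method a real embodied system would use.

```python
import random


class Corridor:
    """Hypothetical toy world: positions 0..4, with the goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action 0 = move left, action 1 = move right (walls clamp the position)
        delta = 1 if action == 1 else -1
        self.position = min(max(self.position + delta, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the agent's 'world model' is just a table of action values."""
    rng = random.Random(seed)
    env = Corridor()
    q = [[0.0, 0.0] for _ in range(env.length)]
    for _ in range(episodes):
        state = env.reset()                      # perceive the starting state
        for _ in range(100):                     # cap the steps per episode
            if rng.random() < epsilon:           # explore: try something random
                action = rng.randrange(2)
            else:                                # exploit: use what was learned
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)   # act, see the consequence
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])  # update
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action per state (0 = left, 1 = right)."""
    return [0 if left > right else 1 for left, right in q]
```

Calling `greedy_policy(train())` yields the action the agent prefers in each position. No labeled examples are involved: the agent only ever sees the consequences of its own moves.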

Designing such systems blends robotics, simulation, computer vision, reinforcement learning and generative models. It's less about answering questions and more about learning to exist in an environment. This doesn't magically give AI a self, but it does move us from "smart autocomplete" toward agents that, in some narrow contexts, behave more like animals or humans.

NVIDIA Cosmos: A "Dream Factory" for Robots

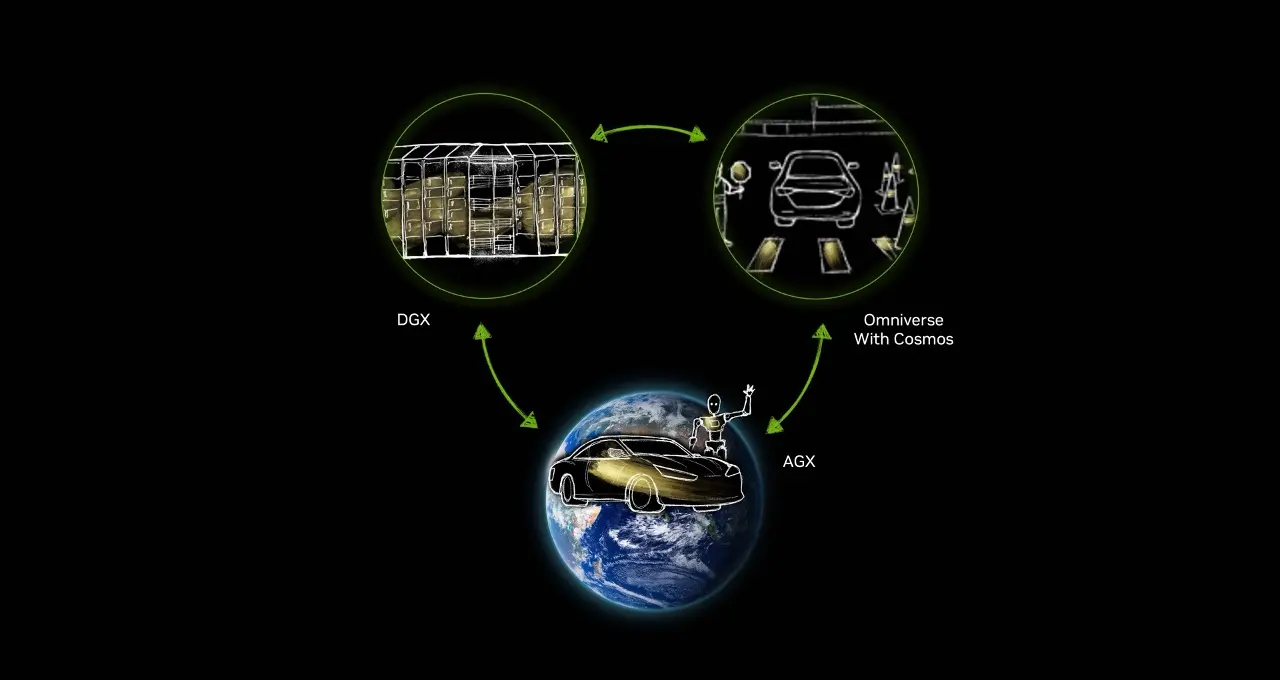

One of the more interesting recent projects in this space is NVIDIA Cosmos – essentially a large-scale platform where embodied AI agents can learn inside high-fidelity simulations before being deployed into the physical world. Cosmos ingests huge amounts of video and sensor data from robots, autonomous vehicles and the real world. It builds a richly detailed virtual environment where AI agents can move, experiment and fail safely. Different models handle prediction (what happens next?), visual realism and physical plausibility (is this scene even possible?).

You can think of it as a dream factory for robots. The system generates thousands of hypothetical scenarios (similar to dream sequences). Agents play through these scenarios, trying actions, making mistakes and updating their policies. Then they "wake up" and test the learned behavior in more realistic settings, and the loop repeats.
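The dream-then-wake loop can be sketched in a few lines. This is not Cosmos code and uses none of its APIs; the braking scenario, the single policy parameter `k`, the friction ranges and the 5% update step are all assumptions made up for illustration. The only point is the shape of the loop: generate varied scenarios, fail cheaply, adjust, then measure on a narrower and more realistic set.

```python
import random

G = 9.81  # gravitational acceleration in m/s^2


def generate_scenario(rng, friction_range):
    """Sample one hypothetical 'dream': a speed (m/s) and a road friction coefficient."""
    return {"speed": rng.uniform(5.0, 30.0),
            "friction": rng.uniform(*friction_range)}


def stops_in_time(k, scenario):
    """The policy starts braking k * speed^2 metres before the obstacle."""
    planned = k * scenario["speed"] ** 2
    needed = scenario["speed"] ** 2 / (2 * scenario["friction"] * G)
    return planned >= needed


def dream_and_test(rounds=5, dreams_per_round=1000, seed=0):
    rng = random.Random(seed)
    k = 0.01  # deliberately unsafe starting policy
    history = []
    for _ in range(rounds):
        # "Dream" phase: wide range of conditions, failures cost nothing.
        for _ in range(dreams_per_round):
            scenario = generate_scenario(rng, friction_range=(0.2, 1.0))
            if not stops_in_time(k, scenario):
                k *= 1.05  # failed, so brake earlier next time
        # "Wake" phase: narrower, more realistic conditions; measure only.
        tests = [generate_scenario(rng, friction_range=(0.4, 0.9))
                 for _ in range(200)]
        success = sum(stops_in_time(k, s) for s in tests) / len(tests)
        history.append((round(k, 4), success))
    return history
```

`dream_and_test()` returns one `(k, success_rate)` pair per round. Training on a wider friction range than the test range is a simple form of domain randomization: the policy ends up cautious enough for conditions harsher than the ones it is later evaluated on.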

Another line of research, from companies like Cortical Labs, goes in an even wilder direction: growing living neurons on chips (DishBrain) and letting them learn to play games such as Pong by interacting with a digital environment. Taken together, these experiments suggest a future where AI is not just a text box in a browser, but a physically grounded agent forming its own, increasingly complex world model. Does that mean consciousness? Not necessarily. But it makes the question less abstract.

Conscious AI: Science, Hype and Real Risks

Here's the uncomfortable part: as soon as AI starts looking more autonomous, humans start treating it like a person. We anthropomorphize anything that gives seemingly meaningful responses, from chatbots to Roombas. Some researchers even talk about non-zero probabilities that advanced models could have proto-conscious states, while others strongly disagree and see this as branding, not science. Regardless of who's right, there are practical risks we need to treat seriously now.

First, misplaced trust and attachment. People can form emotional bonds with chatbots and robots. If we over-market systems as aware or sentient, we increase the chances of unhealthy dependence and psychological harm when those illusions break.

Second, ethical status of advanced agents. If we ever build systems that plausibly meet some scientific criteria for consciousness, we'll have to ask hard questions: can they suffer? Is turning them off ethically neutral, or closer to killing a sentient being? Recent open letters by AI and ethics researchers warn we might stumble into this space by accident and call for constraints on research that deliberately aims at conscious AI.

Third, black box plus autonomy. Highly capable, partly opaque systems embedded in physical environments (cars, hospitals, factories) amplify classic safety concerns: unexpected behaviors, reward hacking, goal misgeneralization, and difficulty auditing why something happened.

Fourth, policy lag. Law and regulation are still catching up with non-conscious AI. Consciousness talk can easily distract from current, concrete problems like bias, surveillance and labor displacement.

At the same time, embodied AI research has legitimate upside: safer, more adaptable robots in logistics, healthcare and disaster response; better simulations for urban planning, climate and mobility; and new tools to study human cognition by trying to recreate aspects of it in machines. For us as a studio, the key is to keep those benefits while staying honest about what AI is not.

Pragmica's View: Design for Reality, Not for Sci-Fi

At Pragmica, we sit in a very practical spot between design, engineering and strategy. Here's how we approach this whole conscious AI conversation in real projects.

We assume zero consciousness – and full responsibility. We treat current AI as sophisticated tools, not colleagues with feelings. That means we, and our clients, own the ethical and business consequences of their behavior.

We design against over-anthropomorphizing. In interfaces, we're careful with tone, avatars and metaphors. We avoid UI patterns that push users into believing there's a person in there when there isn't. That includes being explicit about limitations and failure modes.

We lean into transparency, not mystique. Wherever possible, we surface what data is being used, what the model can and can't do, and how confident it is in a given output. This is especially important in products for finance, healthcare, education and HR.
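As a rough illustration of surfacing confidence in an interface, the sketch below turns raw model scores into a user-facing message and falls back to an explicit "not sure" state below a threshold. The function names, the labels and the 0.7 threshold are hypothetical, and softmax scores are often poorly calibrated in practice, so a real product would need to validate them before showing numbers to users.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def describe(labels, scores, threshold=0.7):
    """Build a user-facing message that admits uncertainty below the threshold."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    if probs[best] < threshold:
        return f"Not sure (best guess: {labels[best]}, {probs[best]:.0%})"
    return f"{labels[best]} ({probs[best]:.0%} confidence)"
```

For example, `describe(["approve", "review"], [3.0, 0.0])` returns `"approve (95% confidence)"`, while `describe(["approve", "review"], [0.2, 0.0])` returns `"Not sure (best guess: approve, 55%)"`.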

We explore embodied and agentic AI where it actually matters. For robotics, gaming, simulation and spatial interfaces, embodied AI is not a philosophical toy – it's a UX and safety requirement. Machines need a robust world model to move through our environments without hurting anyone.

We keep humans at the center of the loop. Even as agents get more autonomous, we design systems where goals and constraints are set by people, critical interventions are human-controlled, and feedback flows both ways (humans learn from AI, and vice versa).
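One simple way to express "critical interventions are human-controlled" in code is an approval gate. This is a minimal sketch under stated assumptions: `ProposedAction`, its `critical` flag and the `approve` callback are hypothetical names, and in a real product the flag would come from rules set by people, not from the agent itself.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class ProposedAction:
    name: str
    critical: bool  # hypothetical flag, defined by the product team's rules


def run_with_oversight(
    actions: Iterable[ProposedAction],
    approve: Callable[[ProposedAction], bool],
) -> List[Tuple[str, str]]:
    """Run routine actions directly; critical ones only after a human approves."""
    log = []
    for action in actions:
        if action.critical and not approve(action):
            log.append((action.name, "blocked"))
        else:
            log.append((action.name, "executed"))
    return log
```

Here `approve` would be wired to a real review step, such as a confirmation dialog or an operator console. The returned log also gives people a record to learn from, which supports the two-way feedback described above.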

So, Do We Need Conscious Machines at All?

Right now, the honest answer is: probably not. We don't need subjectivity to get huge economic and social impact from AI. We do need better alignment, robustness, interpretability and good design around these systems. And we definitely need clearer language – separating what models do from what we project onto them.

Embodied platforms like NVIDIA Cosmos or neuron-on-chip experiments are fascinating partly because they push us to formalize ideas like perception, prediction, agency and self-modeling. In trying to teach machines to understand the world, we're forced to understand ourselves a little better.

As a studio, that's where Pragmica wants to be: not building artificial souls, but designing tools, products and experiences that respect human minds – with all their biases, hopes and vulnerabilities – while using AI in the most grounded, responsible way we can.